Research

Here is the research and code that went into making Flatland work.

Player Position Tracking

GPS

The unit’s built-in GPS has a tracking accuracy of about three meters and refreshes only once every few seconds. Our game needed accuracy down to a few feet and several updates per second. In addition, the unit would often lose its connection to the satellites, leaving players without a position until the connection could be reestablished. We therefore concluded that the GPS was neither precise nor reliable enough.

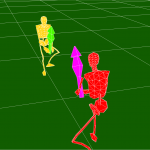

Color Tracking

In this system, each player wears a unique color that is visible and trackable from a camera attached to a balloon above the field. At the beginning of each play session, we calibrate the player profiles by viewing the video feed and selecting a pixel on each player. The system records the color value of that pixel, which becomes associated with the player. During each frame of play, the system searches the neighborhood of the player’s last recorded position and finds the pixel that most closely matches the color value assigned to the player. It then finds a “blob” of surrounding pixels with similar color values (the RGB value is treated like a point in 3D space, and the system finds other pixels within a certain “distance” of that point), and creates a bounding box around those pixels. This box constitutes the player’s position on the field. Even if lighting conditions change (e.g. if the player goes into shadow), the “blob” can still be tracked.
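The per-frame search described above can be sketched in a few lines of Python. This is only an illustration, not the project's actual code: the function names, the `radius` and `threshold` parameters, the 4-connected flood fill, and the choice to grow the blob from the best-matching pixel's color (rather than the calibrated color) are all assumptions.

```python
def color_distance_sq(a, b):
    """Squared Euclidean distance between two (r, g, b) triples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def track_player(frame, last_pos, target, radius=2, threshold=60):
    """Return (seed_pixel, bounding_box) for one player in one frame.

    frame:     list of rows, each a list of (r, g, b) tuples
    last_pos:  (row, col) where the player was found in the previous frame
    target:    calibrated (r, g, b) for this player
    radius:    half-width of the search window in pixels (assumed value)
    threshold: largest RGB distance allowed inside the blob (assumed value)
    """
    height, width = len(frame), len(frame[0])
    r0, c0 = last_pos
    window = [(r, c)
              for r in range(max(0, r0 - radius), min(height, r0 + radius + 1))
              for c in range(max(0, c0 - radius), min(width, c0 + radius + 1))]

    # Step 1: closest color match near the last known position.
    seed = min(window, key=lambda p: color_distance_sq(frame[p[0]][p[1]], target))
    seed_color = frame[seed[0]][seed[1]]

    # Step 2: flood-fill outward, keeping pixels whose color is near the seed's.
    blob, stack = {seed}, [seed]
    while stack:
        r, c = stack.pop()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < height and 0 <= nc < width
                    and (nr, nc) not in blob
                    and color_distance_sq(frame[nr][nc], seed_color) <= threshold ** 2):
                blob.add((nr, nc))
                stack.append((nr, nc))

    # Step 3: bounding box as (min_row, min_col, max_row, max_col).
    rows = [r for r, _ in blob]
    cols = [c for _, c in blob]
    return seed, (min(rows), min(cols), max(rows), max(cols))
```

Growing the blob from the color actually observed in the current frame is one way to tolerate gradual lighting changes, since the whole costume darkens together when a player steps into shadow.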

The frames are copied from the video feed into an OpenGL context, where the pixels are also analyzed. We were able to track up to 10 unique colors, even colors with similar values (e.g. dark orange, light orange), at a rate of 60 fps or greater. But the color tracking system was not ideal because it put constraints on costume design, making it impossible for all players on a single team to wear a common color as the costume designers had planned. Technically, it is a working method, but we’d prefer to find one that doesn’t limit costume design.

WiFi Tracking

Gathering location data from wireless signals has been explored in a number of academic papers. In our case, wireless nodes with known locations would be placed at the four corners of the field. Each node would periodically send pings to each player on the field and measure the round trip time. Since radio signals travel at the speed of light, half the round trip time gives the distance between each node and the player. This data could then be used with multilateration algorithms to calculate a player’s position in 2D or 3D space.
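As a rough illustration of the idea, the sketch below converts round trip times to distances and solves for a 2D position by linearized least squares. This approach was never implemented for the game; the function names, the `processing_delay` parameter, and the field dimensions in the usage lines are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299792458.0  # meters per second


def rtt_to_distance(rtt_seconds, processing_delay=0.0):
    """One-way distance in meters from a measured round trip time.

    processing_delay is the (assumed known) time the player's device
    spends answering the ping.
    """
    return SPEED_OF_LIGHT * (rtt_seconds - processing_delay) / 2.0


def multilaterate_2d(nodes, distances):
    """Least-squares (x, y) from three or more nodes and measured distances.

    Subtracting the first node's circle equation from each of the others
    gives linear equations a*x + b*y = c, solved via the normal equations.
    """
    (x0, y0), d0 = nodes[0], distances[0]
    saa = sab = sbb = sac = sbc = 0.0
    for (xi, yi), di in zip(nodes[1:], distances[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = d0 ** 2 - di ** 2 + xi ** 2 + yi ** 2 - x0 ** 2 - y0 ** 2
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    return (sac * sbb - sab * sbc) / det, (saa * sbc - sab * sac) / det


# Usage: four corner nodes on a hypothetical 30 m x 20 m field.
corners = [(0.0, 0.0), (30.0, 0.0), (30.0, 20.0), (0.0, 20.0)]
player = (12.0, 7.0)
rtts = [2.0 * math.hypot(player[0] - x, player[1] - y) / SPEED_OF_LIGHT
        for x, y in corners]
estimate = multilaterate_2d(corners, [rtt_to_distance(t) for t in rtts])
```

Because the signal covers roughly 0.3 m per nanosecond, a timing error of one nanosecond in the round trip shifts the computed distance by about 0.15 m, which is why clock precision dominates the accuracy of this method.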

The main hurdle to this approach is accuracy. Of the papers cited above, the most accurate was still only to within two meters. For our game, we would prefer to be below one meter. In order to get better accuracy, we would need very precise clocks reporting the round trip time of data. Unfortunately, the hardware listed as acceptable a few years ago is either no longer available or has been updated with new chipsets which no longer give the accuracy required. Another paper used custom hardware to sniff the lines of a PCMCIA card in order to gather accurate timing data. This, unfortunately, was outside the scope of our software developer’s expertise.

IR Tracking

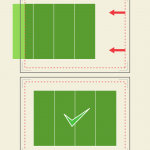

Like the color tracking system, the infrared tracking system also uses the balloon and camera. Instead of tracking certain colors, however, this system tracks infrared light emitted by devices embedded in the player costumes and placed at the four corners of the field. The system uses the field corners to help the camera stay properly oriented: if wind causes the balloon to drift slightly in any direction, a servo in the camera mount will pan or tilt the camera in order to keep the entire field in view. If this produces a distorted perspective view of the field, the system automatically adjusts the input to maintain correct information on player positions.
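One standard way to undo that kind of perspective distortion is a homography computed from the four tracked field corners. The sketch below is an assumption about how such a correction could work, not a description of what the tracking software does internally; all function names and the coordinates in the usage lines are made up for illustration.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def find_homography(src, dst):
    """3x3 projective transform mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map a camera-space point into field coordinates."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Usage: corner emitters as seen by a tilted camera (pixels) mapped onto
# a hypothetical 30 x 20 field.
seen = [(100.0, 80.0), (540.0, 60.0), (600.0, 420.0), (60.0, 400.0)]
field = [(0.0, 0.0), (30.0, 0.0), (30.0, 20.0), (0.0, 20.0)]
to_field = find_homography(seen, field)
player_on_field = apply_homography(to_field, (320.0, 240.0))
```

Recomputing the transform every frame from the corner emitters would keep player positions in field coordinates even as the balloon drifts.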

The disadvantage of this system in comparison with the color tracking system, of course, is that each player is marked with the same color of light. One way to differentiate is by specific contours of how the infrared light is emitted (i.e. the shape of the infrared emitters placed on the costume); but the system can also check on specific players when necessary. It achieves this by momentarily turning off one player’s set of emitters, then verifying which infrared signal temporarily disappeared.
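The blink check can be illustrated as follows: compare the spots seen just before a player's emitters are switched off with the spots seen while they are off, and report the one that vanished. This is a minimal sketch; the function name and the `max_jump` tolerance are assumptions.

```python
import math


def find_missing_blob(before, during, max_jump=1.5):
    """Return the spot from `before` that has no counterpart in `during`.

    before, during: lists of (x, y) spot positions from consecutive frames
    max_jump:       how far a spot may move between frames and still count
                    as the same spot (assumed value, in the same units)

    Returns None unless exactly one spot disappeared, so an ambiguous
    frame can simply be retried.
    """
    missing = [
        p for p in before
        if all(math.hypot(p[0] - q[0], p[1] - q[1]) > max_jump for q in during)
    ]
    return missing[0] if len(missing) == 1 else None


# Usage: the emitters of the player being checked are switched off.
spots_before = [(1.0, 1.0), (5.0, 5.0), (9.0, 2.0)]
spots_during = [(1.2, 1.1), (9.1, 2.0)]
checked_player_position = find_missing_blob(spots_before, spots_during)
```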

This system uses a PS3Eye camera with a CL-Eye driver. The camera records good-quality images with high resolution, all at a higher frame-rate than most USB cameras offer. This driver delivers raw, high-quality visual data without any processing in between.

For the actual tracking of the players/field corners, we are using an open-source program called Community Core Vision. This program is actually designed for tracking hand/finger movement on multi-touch surfaces; but instead of tracking finger tips from below a surface, we are tracking spots of infrared light from 100 feet above the ground.

Gesture Recognition

Players must perform specific motion gestures with their scepters to perform various actions in the game. To accomplish this, we use the accelerometer from a Wii Nunchuk attached to the Nokia N810 through an Arduino.

The technique used for gesture recognition is broken down into these steps:

- Wave the scepter around and measure the range of each axis.

- Quantize the full range into fewer regions to make pattern matching easier.

- Record the gestures we want to match by having a variety of people perform them while recording the data (in the quantized form).

The data that we want to capture is not the actual sequence of motion in the time series but rather the probability that the current vector will move from one quantized region to another. Gestures can be told apart by comparing their transition probabilities. By sampling multiple people and averaging the data, we come up with a general signature for each of the gestures. It will be up to the players to adjust to this generalized pattern from there.
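The quantize, record, and compare steps can be sketched as below. This is a simplified illustration under stated assumptions: the function names, the number of levels per axis, and the sum-of-squared-differences comparison are hypothetical choices, since the comparison method is not specified above.

```python
def quantize(sample, lows, highs, levels=3):
    """Map a raw (x, y, z) accelerometer reading to a single region index."""
    index = 0
    for value, lo, hi in zip(sample, lows, highs):
        fraction = (value - lo) / (hi - lo)
        bucket = min(levels - 1, max(0, int(fraction * levels)))
        index = index * levels + bucket
    return index


def transition_matrix(regions, n_regions):
    """Row-normalized probabilities of moving from one region to the next."""
    counts = [[0] * n_regions for _ in range(n_regions)]
    for a, b in zip(regions, regions[1:]):
        counts[a][b] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


def average_matrices(matrices):
    """Element-wise mean of several recordings: the gesture's signature."""
    n = len(matrices[0])
    return [[sum(m[i][j] for m in matrices) / len(matrices) for j in range(n)]
            for i in range(n)]


def matrix_distance(a, b):
    """Sum of squared differences (an assumed comparison metric)."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def classify(regions, signatures, n_regions):
    """Name of the signature closest to the observed transitions."""
    observed = transition_matrix(regions, n_regions)
    return min(signatures, key=lambda name: matrix_distance(observed, signatures[name]))


# Usage with made-up single-axis recordings (3 regions) from two people.
signatures = {
    "shake": average_matrices([transition_matrix([0, 2, 0, 2, 0, 2], 3),
                               transition_matrix([0, 2, 0, 2, 2, 0], 3)]),
    "hold": average_matrices([transition_matrix([1, 1, 1, 1, 1], 3)]),
}
guess = classify([0, 2, 0, 2, 0], signatures, 3)
```

Because only transitions are counted, the signature does not depend on how fast the player performs the gesture, only on which regions the motion passes between.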

Game Software

We chose Python for both ease of development and ease of deployment. Maemo comes with both Python and PyGame, which we used for the graphics engine in our game.

Networking

We chose Twisted as our networking API. Keeping many clients synchronized is easy with Twisted’s perspective broker (http://twistedmatrix.com/documents/10.2.0/core/howto/pb-intro.html). It also has the benefit of being written completely in Python, so there weren’t any build issues putting it on the Nokia tablet. Using perspective broker, Python objects can be sent across the network and kept in sync with each other using a minimal amount of logic.

Game Logic

The rules of the game are enforced on the server side. This was done to cut down on the calculations done on the tablets. The tablets act as simple devices that transmit any actions the player is performing and display the game field. The server calculates the position of each player and updates them, keeps track of player health, and performs building logic.

Here’s a link to the project repo: https://github.com/uclagamelab/flatland-arg